I built a Kubernetes cluster tonight to learn a bit about the technology. Here are my notes on building it!

It runs on a new ESXi server with the following specs (you’d be surprised how well this rig holds up):

- Dell Optiplex 7010

- Core i7-3770

- 32GB RAM

- 1TB SSD

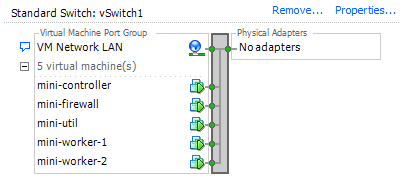

I created a virtual network that only the VMs can access.

I used pfSense as the firewall, both to keep the lab isolated from my main network and to provide DHCP on the internal virtual network. A single Windows Server 2019 VM on that network gives me access to the pfSense WebGUI. It’s needlessly complicated, but I wanted a proper virtual network to test all of this on.

I built the following VMs, all on Ubuntu 22.04 LTS:

- Controller: 2 vCPUs, 4GB RAM, 50GB HDD

- Workers (x2): 2 vCPUs, 4GB RAM, 25GB HDD each
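For reference, the kubeadm installation docs list 2 GB of RAM per machine and 2 CPUs for control-plane machines as minimums, so these specs clear them. Here is a quick sketch for checking a spec against those numbers. The function and its `controller`/`worker` role names are hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: check a VM spec against the minimums in the kubeadm install docs
# (2 GB RAM per machine, 2 CPUs for the control plane).
# meets_minimums and its "controller"/"worker" role names are hypothetical.

meets_minimums() {
  local role="$1" vcpus="$2" ram_gb="$3"

  # Every node, controller or worker, needs at least 2 GB of RAM.
  if (( ram_gb < 2 )); then
    echo "${role}: ${ram_gb}GB RAM is below the 2GB minimum" >&2
    return 1
  fi

  # The 2-CPU minimum applies to the control plane only.
  if [[ "$role" == "controller" ]] && (( vcpus < 2 )); then
    echo "${role}: ${vcpus} vCPU(s) is below the 2-CPU minimum" >&2
    return 1
  fi

  return 0
}

# The specs from the list above.
meets_minimums controller 2 4 && echo "controller OK"
meets_minimums worker 2 4 && echo "worker OK"
```

On a VM itself you could pass `"$(nproc)"` for the CPU count and `"$(free -g | awk '/^Mem:/ {print $2}')"` for RAM. Keep in mind that `free -g` rounds down, so a 4GB VM may report 3.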

I then reserved all of their IPs in pfSense so they wouldn’t change. Next, I followed this guide, specifically the scripts from the GitHub repository it links to:

https://devopscube.com/setup-kubernetes-cluster-kubeadm/

Command to download the scripts:

```bash
git clone https://github.com/techiescamp/kubeadm-scripts
```

Run `chmod +x` on both scripts to make them executable. Run `common.sh` on every machine, the controller and all workers alike. Next, edit `master.sh`: set `MASTER_IP=` to your controller’s IP address, and change the pod network range under `POD_CIDR` if needed (I left it at the default). Finally, run `master.sh` on the controller only.
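If you’d rather not open an editor, the `master.sh` changes can be scripted. This is a minimal sketch that assumes each setting sits on its own line starting with `NAME=` (as the `MASTER_IP=` line is described above). `set_script_var` is a hypothetical helper and is not part of the kubeadm-scripts repo:

```bash
#!/usr/bin/env bash
# Sketch: set a NAME=value line in a shell script (e.g. MASTER_IP in master.sh).
# set_script_var is a hypothetical helper, not part of kubeadm-scripts.

set_script_var() {
  local script="$1" name="$2" value="$3"

  # Refuse to guess if the variable isn't defined on its own line.
  if ! grep -q "^${name}=" "$script"; then
    echo "error: no ${name}= line in ${script}" >&2
    return 1
  fi

  # Replace the whole line. "|" is the sed delimiter, so CIDRs such as
  # 10.244.0.0/16 don't clash with it.
  sed -i "s|^${name}=.*|${name}=\"${value}\"|" "$script"
}
```

For example, run `set_script_var master.sh MASTER_IP 10.0.0.10` (10.0.0.10 is a placeholder for your controller’s IP). Then run `grep '^MASTER_IP=' master.sh` to confirm the change before you run the script.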

On the controller, run this command to print the join command:

```bash
kubeadm token create --print-join-command
```

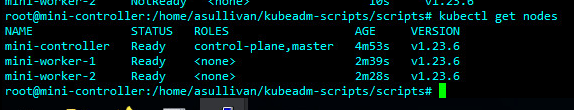
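A truncated paste is the easiest way to get this step wrong. This small sketch checks that a join command has all of its parts before you run it on a worker. It assumes the usual single-line format, `kubeadm join <ip>:<port> --token <token> --discovery-token-ca-cert-hash sha256:<hash>`. The function name is hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: make sure a pasted join command is complete before running it.
# Expected shape (single line):
#   kubeadm join <ip>:<port> --token <token> --discovery-token-ca-cert-hash sha256:<hash>
# is_full_join_command is a hypothetical helper name.

is_full_join_command() {
  local cmd="$1"
  # "kubeadm join" followed by the API server address, e.g. 10.0.0.10:6443.
  local head_re='^kubeadm join [^ ]+:[0-9]+ '
  # Bootstrap token: 6 characters, a dot, then 16 characters.
  local token_re='--token [a-z0-9]{6}\.[a-z0-9]{16}'
  # CA public key hash: "sha256:" plus 64 hex digits.
  local hash_re='--discovery-token-ca-cert-hash sha256:[0-9a-f]{64}'

  [[ "$cmd" =~ $head_re ]] && [[ "$cmd" =~ $token_re ]] && [[ "$cmd" =~ $hash_re ]]
}
```

On the controller, for example, run `join_cmd=$(kubeadm token create --print-join-command)` and then `is_full_join_command "$join_cmd" && echo "$join_cmd"` so you only copy a complete command.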

Run the FULL output (everything from `kubeadm join` through the `sha256:` hash) on each worker you want to join to the cluster. Then check the connection from the controller:

```bash
kubectl get nodes
```

Example output:

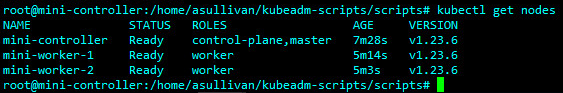
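Workers can take a minute to go from `NotReady` to `Ready` while the pod network comes up. This rough sketch counts Ready nodes from the `kubectl get nodes` output. It assumes the default column layout, where STATUS is the second column, and `count_ready_nodes` is a hypothetical name:

```bash
#!/usr/bin/env bash
# Sketch: count nodes whose STATUS is exactly "Ready".
# Expects `kubectl get nodes --no-headers` output on stdin (column 2 is STATUS).
# A cordoned node shows "Ready,SchedulingDisabled" and is deliberately not counted.

count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

With the controller and both workers up, `kubectl get nodes --no-headers | count_ready_nodes` should print 3. If you’d rather block until everything is up, `kubectl wait --for=condition=Ready node --all --timeout=300s` does that directly.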

Note that the workers’ roles show as `<none>`. Fix this by labeling each worker, substituting in your own node names:

```bash
kubectl label node mini-worker-1 node-role.kubernetes.io/worker=worker
```
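With more than one or two workers, you can loop the labeling. This is a minimal sketch. `label_workers` is a hypothetical helper, and `--overwrite` is there so re-running it doesn’t error on nodes that already carry the label:

```bash
#!/usr/bin/env bash
# Sketch: give every named node the "worker" role label in one go.
# label_workers is a hypothetical helper; pass your own node names.

label_workers() {
  local node rc=0
  for node in "$@"; do
    # --overwrite makes re-running harmless if the label is already set.
    kubectl label node "$node" node-role.kubernetes.io/worker=worker --overwrite || rc=1
  done
  # Non-zero if any node failed to label.
  return "$rc"
}
```

Usage: `label_workers mini-worker-1 mini-worker-2` (the second name is a placeholder for your other worker).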

Then run `kubectl get nodes` again to confirm they now show the `worker` role.

Congrats! You now have a (slightly dirty) Kubernetes cluster up and ready for deployments! This covers only the bare basics, but it should get you rolling. Good luck and happy tinkering!
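To confirm the cluster really does accept deployments, a quick smoke test is to create a throwaway deployment and see which workers its pods land on. This is a minimal sketch. `smoke_test`, the `hello` name, and the `nginx` image are arbitrary choices, not something from the guide:

```bash
#!/usr/bin/env bash
# Sketch: a throwaway deployment to confirm the workers accept pods.
# smoke_test and its defaults (hello / nginx / 2 replicas) are arbitrary examples.
# Clean up afterwards with: kubectl delete deployment <name>

smoke_test() {
  local name="${1:-hello}" image="${2:-nginx}" replicas="${3:-2}"
  kubectl create deployment "$name" --image="$image" --replicas="$replicas" || return 1
  # Block until the pods are running, or give up after two minutes.
  kubectl rollout status "deployment/${name}" --timeout=120s || return 1
  # kubectl create deployment labels pods app=<name>; -o wide adds a NODE column.
  kubectl get pods -l "app=${name}" -o wide
}
```

Running `smoke_test` with no arguments creates a two-replica `hello` deployment. Ideally the NODE column shows the pods spread across your workers.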
